Multi-Agent Influence Diagrams for Governance Protocols

Governance attacks refer to scenarios where malicious actors exploit the governance structure of a decentralized protocol to manipulate decision-making processes to their benefit. In decentralized finance (DeFi) platforms, governance is typically controlled by token holders who vote on proposals that influence the protocol's future. These votes can affect factors like protocol parameters, upgrades, or treasury allocations.

In this post, we discuss the increasing threat of governance attacks on DeFi protocols, especially as decentralized systems grow in complexity.

Traditional approaches to securing governance systems—such as cryptographic and technical audits—are proving insufficient when it comes to dealing with incentive misalignments among agents (voters). This introduces the need for game-theoretic formal verification methods like MAIDs (Multi-Agent Influence Diagrams), which allow for a deeper analysis of agent behaviors and their potential to deviate from expected governance norms.

MAIDs offer a formal framework to model strategic interactions among agents. By analyzing these interactions, governance protocols can be better designed to prevent attacks that stem from misaligned incentives.

Example of Governance Attack on Compound

A whale, named Humpy, coordinated significant delegations of $COMP tokens to control voting. Humpy aimed to manipulate governance decisions to allocate $24 million worth of $COMP to a protocol he controlled, called goldCOMP, run by a group known as Golden Boys. This example demonstrates how governance tokens can be exploited to serve the interests of a few, often to the detriment of the protocol’s health and integrity.

MAIDs offer a way to model and mitigate vulnerabilities of this kind by formally verifying the incentive structures within governance systems.

Defining Incentive Misalignment in Governance

Incentive misalignment occurs when the goals of participants (agents) in a governance protocol are not aligned with the overall objectives of the protocol. This can lead to participants acting in ways that harm the protocol’s sustainability or security.

In decentralized governance, where decisions are made through voting systems, this misalignment can manifest in the form of governance attacks, where certain participants (often with large holdings) exploit the governance process for personal gain.

For example, an agent might use their voting power to pass proposals that benefit their own financial interests, even if those decisions are detrimental to the long-term health of the protocol. This creates an environment where the economic incentives for individual agents do not encourage behavior that supports the overall sustainability of the protocol.

Potential Solutions Using MAIDs

MAIDs allow for the modeling of strategic interactions between multiple agents, considering their individual incentives, decisions, and possible outcomes. By using MAIDs, it becomes possible to predict how agents might behave in a governance scenario and to design incentive structures that ensure alignment with the protocol’s objectives.

The underlying theory of MAIDs, developed by Koller and Milch, provides a structured way to represent the choices available to each agent and the influence those choices have on each other. This is especially valuable in DeFi governance, where multiple actors are interacting, each with their own objectives and strategies.

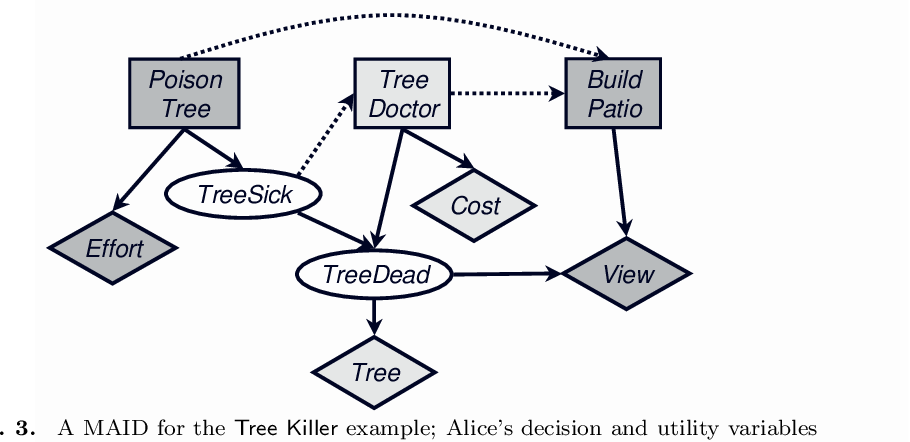

Tree Killer Example (Koller and Milch)

In this scenario, Alice wants to build a patio behind her house to have a clear view of the ocean. However, a tree in her neighbor Bob's yard blocks her view. Alice considers poisoning the tree to get rid of it, which would give her a better view but at a cost.

Bob, on the other hand, is unaware of Alice’s intentions but notices that his tree is sick and has the option of hiring a tree doctor to save it. The decision-making process of both Alice and Bob is influenced by several factors, such as the cost of poisoning the tree and the cost of hiring the tree doctor. The key takeaway here is that both parties have distinct incentives, and their actions are based on incomplete information about the other’s intentions.

This example illustrates how MAIDs can be used to model the decision-making process between agents with conflicting interests, providing a framework for understanding and potentially resolving misaligned incentives.

Representation of a MAID

A Multi-Agent Influence Diagram (MAID) is a formal representation used to model strategic interactions between multiple agents within a system. In the context of governance protocols, MAIDs help visualize and analyze how different participants (agents) in the governance process make decisions based on their individual incentives and the influence of other agents' decisions.

A MAID can be described as a tuple consisting of the following components:

- Set of Agents (A): These are the participants in the governance protocol. Each agent is an entity that makes decisions based on the information available to them and their own utility (incentives).

- Chance Variables (𝜒): These represent random or uncontrollable factors that can affect the outcome of decisions. In the governance context, chance variables might represent market conditions, token price fluctuations, or other environmental factors outside the direct control of agents.

- Decision Variables (D): These represent the decisions made by the agents in the system. In governance protocols, decision variables include voting choices or actions taken regarding governance proposals.

- Utility Variables (U): These represent the outcomes or payoffs that each agent expects to receive as a result of their decisions. Utility is based on how much an agent benefits from the outcome of their actions. For example, if an agent votes for a proposal that benefits them financially, their utility increases.
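To make the tuple concrete, here is a minimal Python sketch of a MAID container. Besides the four components listed above, it also stores the directed edges that connect the variables into a graph, which is part of Koller and Milch's definition even though it is not listed here. Conditional probability distributions are omitted, and the class name, method names, and the single-voter example (variables `CR`, `MS`, `V1`, `U1`) are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class MAID:
    """Minimal MAID container (a sketch, not a full Koller-Milch implementation)."""
    agents: set[str]
    chance: set[str]                  # chance variables (written 𝜒 in the text)
    decisions: dict[str, set[str]]    # agent -> decision variables it controls (D)
    utilities: dict[str, set[str]]    # agent -> utility variables it receives (U)
    edges: set[tuple[str, str]] = field(default_factory=set)  # (parent, child) pairs

    def decision_nodes(self) -> set[str]:
        return set().union(*self.decisions.values())

    def utility_nodes(self) -> set[str]:
        return set().union(*self.utilities.values())

    def nodes(self) -> set[str]:
        return self.chance | self.decision_nodes() | self.utility_nodes()

    def parents(self, node: str) -> set[str]:
        """For a decision node, the parents are the variables its agent observes."""
        return {p for p, c in self.edges if c == node}

    def validate(self) -> None:
        """Basic structural checks only; acyclicity and probabilities are not checked."""
        if not (set(self.decisions) | set(self.utilities)) <= self.agents:
            raise ValueError("decision/utility owner is not a declared agent")
        known = self.nodes()
        for parent, child in self.edges:
            if parent not in known or child not in known:
                raise ValueError(f"edge refers to unknown variable: {(parent, child)}")
            if parent in self.utility_nodes():
                raise ValueError(f"utility variable {parent} must not have children")


# Hypothetical single-agent governance MAID: the voter observes collateral
# dynamics (CR) and market sentiment (MS) before choosing a vote (V1).
gov = MAID(
    agents={"a1"},
    chance={"CR", "MS"},
    decisions={"a1": {"V1"}},
    utilities={"a1": {"U1"}},
    edges={("CR", "V1"), ("MS", "V1"), ("V1", "U1"), ("CR", "U1"), ("MS", "U1")},
)
gov.validate()
print(sorted(gov.parents("V1")))  # ['CR', 'MS']
```

Treating utility variables as leaves (no outgoing edges) follows the usual influence-diagram convention; a fuller implementation would also attach probability tables to the chance variables and utility functions to the utility variables.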

MAID Framework for Governance Protocols

In governance protocols, the interaction between these components can be quite complex. Each agent’s decisions are influenced by the chance variables, the decisions of other agents, and their expected utility from different outcomes. By using MAIDs, we can model this dynamic and analyze how different factors affect the behavior of agents.

For example, if there is a proposal to change a protocol’s parameters (such as collateral requirements), agents will make decisions based on their stake in the governance token, their risk tolerance, and the expected market conditions. MAIDs allow us to simulate these interactions and determine what strategies the agents are likely to adopt.

Importance of MAIDs in Governance Verification

MAIDs are particularly useful for governance protocols because they help address questions like:

- How will agents vote if the market is volatile?

- What happens if a malicious agent enters the system and attempts to disrupt governance?

- Can we design incentive structures that encourage agents to act in the best interest of the protocol?

By representing governance interactions using MAIDs, it becomes possible to formally verify that the incentive structure aligns the agents’ behavior with the protocol's overall objectives. This can help prevent situations where agents exploit governance for personal gain at the expense of the protocol’s long-term health.

A Simple Governance Game

Here the key assumption is that all agents in the system are homogeneous. This means that every agent behaves in the same manner when faced with similar situations. In a governance protocol, this could represent participants who own governance tokens and follow identical strategies for voting on proposals.

The assumption of homogeneous agents simplifies the analysis because it allows for the modeling of agent behavior in a standardized way. In real-world governance systems, while not all agents are truly homogeneous, this assumption can be useful for understanding the collective dynamics of participants who share similar incentives or voting power.

The governance system in this game revolves around a governance token, represented as $GOV. Each agent owns a certain amount of $GOV, which gives them the right to vote on governance proposals. We are assuming that:

- Each agent holds a stake in the protocol through $GOV tokens.

- Agents can vote on various proposals that are put forward within the governance structure.

The proposal system in a decentralized protocol allows any participant to bring forward a proposal for changes or improvements to the protocol. Once a proposal is introduced, the token holders (agents) vote on whether to accept or reject it.

In this model, it is assumed that the agent who brings forward the proposal abstains from voting to remain neutral, allowing the rest of the participants to make the decision. The agents are then tasked with analyzing the proposal based on its impact on the protocol's overall health, security, or profitability.

For example, in a DeFi lending protocol, a typical proposal might suggest changing the risk parameters for collateral assets, such as requiring higher collateralization ratios to reduce risk. The agents must then decide whether or not to support the proposal, depending on their stake in the protocol and the broader market conditions.

In this model, each agent’s decision-making process is influenced by:

- The actions of other agents (who are assumed to behave similarly).

- Random chance variables such as market volatility.

- The agent’s expected utility (benefit) from voting for or against a proposal.

By representing the governance game through a MAID, it becomes easier to understand how different agents' voting decisions are interconnected and how they affect the final outcome of the vote. The MAID framework allows for a clear depiction of the strategic interactions between agents, making it possible to calculate optimal strategies for voting and analyze the protocol's governance structure more thoroughly.

Computation of Nash Equilibrium for a MAID

The central concept here is the Nash Equilibrium, where each agent chooses a strategy that maximizes their utility, given the strategies chosen by other agents.

In the context of decentralized governance, each agent makes decisions based on:

- The expected market conditions (e.g., market sentiment, volatility).

- The risk associated with the protocol’s collateral assets (e.g., whether the collateral dynamics are risky or stable).

Agents aim to maximize their utility (i.e., their financial gain or the protocol's health) while considering the actions of other agents. The Nash Equilibrium represents a point where no agent can improve their utility by changing their decision, assuming all other agents maintain their current strategies.

Example: Voting on Strengthening Risk Parameters

In this example, agents are voting on whether to strengthen the risk parameters of a decentralized finance (DeFi) protocol, such as reducing the borrowing limit for certain risky assets. The decisions are represented by:

- V = 1: Voting for strengthening the risk parameters.

- V = 0: Voting against the proposal.

Agents take into account two key factors:

- Collateral Dynamics (CR): Whether the collateral dynamics are risky (CR = 1) or stable (CR = 0).

- Market Sentiment (MS): Whether market sentiment is poor (MS = 1) or positive (MS = 0).

Using these factors, the agents compute the optimal strategy for voting:

- V = 1 (Vote in favor) if the collateral dynamics are risky (CR = 1) or the market sentiment is poor (MS = 1).

- V = 0 (Vote against) if both the collateral dynamics are stable (CR = 0) and the market sentiment is good (MS = 0).
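The voting rule above is a simple OR over the two binary signals. The short Python sketch below encodes it and enumerates all four (CR, MS) states. The function name `optimal_vote` is hypothetical; the rule itself is taken directly from the two bullets.

```python
from itertools import product


def optimal_vote(cr: int, ms: int) -> int:
    """Vote 1 (strengthen) if collateral is risky (CR=1) or sentiment is poor (MS=1), else 0."""
    return 1 if (cr == 1 or ms == 1) else 0


# Tabulate the prescribed vote for every (CR, MS) combination.
policy = {(cr, ms): optimal_vote(cr, ms) for cr, ms in product((0, 1), repeat=2)}

for (cr, ms), v in sorted(policy.items()):
    print(f"CR={cr} MS={ms} -> V={v}")
```

Only the state with stable collateral and positive sentiment (CR = 0, MS = 0) produces a vote against; the other three states all produce a vote in favor.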

This framework helps agents assess the potential risks and benefits of strengthening the protocol’s risk parameters, ensuring that their decision aligns with both individual and collective interests.

Expected Utility for Governance Agents

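The expected utility (EU) for each agent in this governance game is the probability-weighted average of the payoffs the agent expects to receive from its decisions. Weighting each of the four combinations of collateral dynamics (CR) and market sentiment (MS) equally (probability 0.25 each), the expected utility of the voting strategy σ is: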

EU(σ)=0.25⋅(100+50+25+100)=68.75

This value of 68.75 is the utility an agent can expect, on average, from participating in the voting process across the four equally likely combinations of market sentiment and collateral risk.
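The 68.75 figure can be reproduced with a few lines of Python. This is a sketch under one stated assumption: the text lists the four payoffs (100, 50, 25, 100) but not which (CR, MS) state each belongs to, so the mapping in `PAYOFFS` is a hypothetical placeholder. Under a uniform prior the mapping does not change the result; it only matters if the prior is non-uniform.

```python
from itertools import product

# Hypothetical assignment of the four payoffs from the text to (CR, MS) states.
PAYOFFS = {(0, 0): 100, (0, 1): 50, (1, 0): 25, (1, 1): 100}

# Uniform prior over the four (CR, MS) combinations: probability 0.25 each.
UNIFORM_PRIOR = {state: 0.25 for state in product((0, 1), repeat=2)}


def expected_utility(prior: dict, payoffs: dict) -> float:
    """Probability-weighted average of payoffs over states."""
    if abs(sum(prior.values()) - 1.0) > 1e-9:
        raise ValueError("prior probabilities must sum to 1")
    return sum(prior[state] * payoffs[state] for state in payoffs)


eu = expected_utility(UNIFORM_PRIOR, PAYOFFS)
print(eu)  # 68.75
```

Passing a different prior (for example, one that makes risky collateral more likely) shows how sensitive an agent's expected utility is to its beliefs about market conditions.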

If voting power is determined by the amount of $GOV tokens an agent holds (stake-based voting), the expected utility would be proportional to their token holdings:

EU = ($GOV owned by agent / total $GOV supply) × 68.75
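The stake-weighted formula is easy to sketch directly. In this Python example the function name, the holder names, and the token amounts are all hypothetical; only the formula and the 68.75 base value come from the text.

```python
BASE_EU = 68.75  # expected utility from the uniform-prior calculation in the text


def stake_weighted_eu(owned: float, total_supply: float, base_eu: float = BASE_EU) -> float:
    """EU = ($GOV owned by agent / total $GOV supply) * base_eu."""
    if total_supply <= 0:
        raise ValueError("total supply must be positive")
    if not 0 <= owned <= total_supply:
        raise ValueError("holding must lie between 0 and the total supply")
    return base_eu * owned / total_supply


# Hypothetical holders and supply, for illustration only.
TOTAL_SUPPLY = 1_000_000
holdings = {"whale": 400_000, "fund": 100_000, "small holder": 500}

for name, owned in holdings.items():
    print(f"{name}: {stake_weighted_eu(owned, TOTAL_SUPPLY):.4f}")
```

With these made-up numbers, the single largest holder captures 800 times the expected utility of the small holder, which is the concentration effect the next paragraphs describe.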

Under stake-based voting, agents with more governance tokens capture a proportionally larger share of the expected utility, and because voting power also scales with stake, they have a larger impact on which proposals pass. This dynamic can lead to scenarios where larger stakeholders control governance outcomes while smaller token holders have little influence.

This distribution of power needs to be carefully managed so that governance decisions reflect the interests of the entire community rather than being dominated by a few large stakeholders. MAIDs can be used to analyze how these dynamics play out and to design governance systems that maintain fairness and alignment among participants.

Multi-Agent Equilibrium

In this section, we shift focus from a general governance system with many participants to a simplified scenario involving just two agents. This simplified model is used to illustrate how strategic interactions between agents can be analyzed within a governance protocol. While larger systems can be more complex, analyzing two-agent interactions can provide meaningful insights into how incentives and behaviors might scale in larger populations.

The agents are:

- Agent 1 (A1): Represents one participant in the governance system.

- Agent 2 (A2): Represents another participant.

The agents in this model are assumed to be homogeneous, meaning they have identical decision-making processes, utilities, and chance variables. The assumption of homogeneity simplifies the analysis, allowing us to focus on strategic behavior without introducing additional complexity from differing agent behaviors.

Since the agents are homogeneous, the behavior of one agent (A1) directly influences the behavior of the other (A2). The optimal strategy for one agent is to mimic the strategy of the other agent. This leads to a collective action scenario, where both agents end up following the same strategy because it maximizes their expected utility.

Each agent has its own utility variable:

- Utility of Agent 1 (U1)

- Utility of Agent 2 (U2)

Both agents share the same chance variables (𝜒) and have identically defined decision variables (D) and utility functions (U1 and U2 take the same form). This implies that their decisions will converge towards the same outcome, creating what is known as a multi-agent equilibrium.

The equilibrium in this system is determined by each agent choosing the best strategy, given the strategy of the other. In a homogeneous system, the Nash Equilibrium occurs when both agents select the same optimal strategy, leading to maximum utility for both.

For instance, if Agent 1 (A1) adopts a strategy of voting "yes" on a governance proposal, Agent 2 (A2) will find it optimal to vote "yes" as well. This is because their expected utility from following the same strategy is higher than deviating from it.
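One way to check this reasoning is to enumerate best responses in a two-agent game and keep the action profiles where each agent is already best-responding to the other, which is the definition of a pure-strategy Nash equilibrium. The payoff numbers below are hypothetical (the text gives no two-agent payoff table); they are chosen only so that matching votes pay more than mismatched ones and joint "yes" pays most.

```python
from itertools import product

ACTIONS = ("yes", "no")

# Hypothetical payoffs (u1, u2) for two homogeneous agents; illustrative only.
PAYOFFS = {
    ("yes", "yes"): (100, 100),
    ("yes", "no"): (0, 0),
    ("no", "yes"): (0, 0),
    ("no", "no"): (50, 50),
}


def best_response(player: int, other_action: str) -> set[str]:
    """Actions maximizing `player`'s payoff given the other agent's action."""
    def payoff(action: str) -> int:
        profile = (action, other_action) if player == 0 else (other_action, action)
        return PAYOFFS[profile][player]

    best = max(payoff(a) for a in ACTIONS)
    return {a for a in ACTIONS if payoff(a) == best}


def pure_nash_equilibria() -> list[tuple[str, str]]:
    """Profiles where each agent's action is a best response to the other's."""
    return [
        (a1, a2)
        for a1, a2 in product(ACTIONS, repeat=2)
        if a1 in best_response(0, a2) and a2 in best_response(1, a1)
    ]


print(best_response(1, "yes"))  # {'yes'}
print(pure_nash_equilibria())   # [('yes', 'yes'), ('no', 'no')]
```

Under these assumed payoffs, both matching profiles are equilibria, which is typical of coordination games; ("yes", "yes") is the one that gives both agents the higher utility, consistent with the example above.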

The results of this equilibrium indicate that in a two-agent system, where both agents act with the same information and incentives:

- Agent A2 (the follower) will mimic the decision of Agent A1 (the leader).

- Both agents adopt the same strategy, ensuring that the governance outcome aligns with their shared utility functions.

This concept is crucial in governance systems where it is important to maintain consistency in decision-making to prevent governance attacks or misaligned outcomes.

The collective action scenario is highlighted as an important feature of homogeneous agent systems. Since each agent's optimal strategy is to follow the same behavior as the other, there is a strong tendency towards uniformity in voting patterns. This can be beneficial for the protocol’s stability, as agents will collectively vote in favor of proposals that align with their shared goals, such as preserving the health and sustainability of the protocol.

However, this uniformity also introduces potential risks. If an adversarial agent disrupts the system, the collective action scenario may amplify the impact of the adversary’s behavior, as other agents may follow suit without realizing the malicious intent.

Application to DeFi Governance Protocols

The optimal strategy for any agent in the governance of a DeFi protocol is assumed to be promoting the protocol's sustainability. This is based on the idea that agents benefit when the protocol thrives, and they lose when the protocol fails. Thus, agents have an inherent incentive to vote in favor of decisions that ensure long-term success. The analysis assumes that if every agent acts optimally, the protocol should have no instances of governance manipulation or Governance Extractable Value (GEV).

Governance Extractable Value (GEV)

GEV refers to the value that can be extracted by malicious actors or insiders by exploiting governance decisions for their own benefit. This could involve passing proposals that enrich a few token holders at the expense of the broader protocol or exploiting loopholes in the governance process to extract value. GEV is analogous to Miner Extractable Value (MEV, now more commonly called Maximal Extractable Value), but it occurs within the governance layer of decentralized protocols rather than the blockchain consensus layer.

However, if there is a deviation from the optimal strategy—such as agents acting out of self-interest rather than for the collective good—there may be opportunities for governance attacks. These attacks occur when certain agents manipulate voting outcomes to extract value or influence decisions in ways that harm the protocol.

Even in systems where the majority of agents are honest and aligned with the protocol’s objectives, deviations from optimal strategies can introduce vulnerabilities. For example:

- An agent with a large stake might vote in favor of proposals that increase short-term profits but harm the protocol in the long run.

- Some agents may collude to pass proposals that benefit only a select group of participants.

When incentive misalignment occurs, the collective action scenario that ensures the protocol’s stability breaks down. In such cases, the governance system becomes vulnerable to exploitative behavior.

One potential solution is ensuring that the governance system rewards agents for voting in favor of decisions that promote long-term sustainability, while punishing behavior that introduces risk or harms the protocol.

What Does This Mean for DeFi Governance Protocols?

For DeFi governance protocols, this analysis underscores the importance of designing systems where agents’ incentives are aligned with the protocol’s overall objectives. If the optimal strategy for every agent is to promote protocol sustainability, the protocol can avoid many of the vulnerabilities that lead to governance attacks and GEV. However, if incentive misalignments exist, there will always be a risk of governance manipulation.

To mitigate these risks, MAIDs can be used to analyze governance protocols and check that agents’ behaviors are consistent with the long-term success of the system. This approach can help protocol designers identify potential weak points in governance and take steps to prevent attacks before they happen.

Introduction of Adversarial Agents

Until this point, the analysis has assumed that all agents in the governance protocol are homogeneous and aligned with the protocol’s best interests. However, in reality, governance systems often face attacks from agents with malicious intent who seek to disrupt the protocol or manipulate governance decisions for personal gain.

The adversarial agent, denoted as a′, has the same decision-making framework (chance variables and decision space) as the honest agents in the protocol, but with a distinct utility function. While honest agents aim to promote the protocol’s sustainability, the adversarial agent’s utility function is geared toward disrupting the system or extracting governance value at the protocol’s expense.

Disruption of the Protocol by Malicious Agents

The primary goal of the adversarial agent is to act in a manner that opposes the collective action of the honest agents. For example, in a proposal to strengthen risk parameters for a risky collateral asset, honest agents would typically vote “yes” (d_a = yes) to preserve the protocol’s long-term stability. However, the adversarial agent votes “no” (d_a′ = no) to block the proposal, which could lead to increased risk and potential vulnerabilities within the protocol.

The introduction of adversarial agents in the model helps capture scenarios where governance is vulnerable to malicious voting behavior, such as:

- Sybil attacks, where an attacker uses multiple identities to influence governance decisions.

- Collusion among insiders, where a small group of participants manipulates voting outcomes for personal benefit.

Payoff Matrix for Honest and Adversarial Agents

- If both agents vote “yes” to support the proposal, the honest agent achieves a utility of +100, reflecting the positive outcome for the protocol’s sustainability.

- If the adversarial agent votes “no,” they disrupt the system and achieve a utility of +100, as they benefit from blocking the proposal and potentially increasing the protocol’s vulnerability.

- The honest agent still votes “yes” because that is the optimal strategy for protocol sustainability, but their utility decreases due to the adversarial interference.

The adversarial agent’s dominant strategy is to vote against the proposal, while the honest agent’s dominant strategy is to vote for the proposal, regardless of the adversarial agent’s actions. This creates a situation where the two agents are in conflict, with the adversarial agent trying to destabilize the protocol while the honest agent seeks to preserve it.
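A strategy is strictly dominant if it does better than every alternative against every possible action of the opponent. The Python sketch below checks this for a two-player honest/adversarial game. Only the two +100 entries come from the bullets above; every other payoff is a hypothetical placeholder chosen to match the qualitative description (the honest agent's utility drops when the adversary votes "no").

```python
ACTIONS = ("yes", "no")

# Payoffs (honest, adversary) indexed by (honest_vote, adversary_vote).
# Only the two +100 values come from the text; the rest are hypothetical.
PAYOFFS = {
    ("yes", "yes"): (100, 0),
    ("yes", "no"): (50, 100),
    ("no", "yes"): (25, 0),
    ("no", "no"): (0, 100),
}


def payoff(player: int, own: str, other: str) -> int:
    """Payoff to `player` (0 = honest, 1 = adversary) for its own and the other's vote."""
    profile = (own, other) if player == 0 else (other, own)
    return PAYOFFS[profile][player]


def strictly_dominant_strategy(player: int):
    """Return the action that beats every alternative against every opponent action, or None."""
    for a in ACTIONS:
        if all(
            payoff(player, a, o) > payoff(player, b, o)
            for b in ACTIONS if b != a
            for o in ACTIONS
        ):
            return a
    return None


print("honest:", strictly_dominant_strategy(0))     # honest: yes
print("adversary:", strictly_dominant_strategy(1))  # adversary: no
```

Changing the placeholder payoffs is a quick way to see when the honest agent's "yes" stops being dominant, for example if voting "no" alongside the adversary were rewarded.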

Expected Utility and Dominant Strategies in Adversarial Scenarios

In a system with adversarial agents, the expected utility for honest agents can decrease due to the disruptive actions of the malicious participants. However, as long as honest agents control the majority of voting power, the protocol can still reach the correct governance decisions.

- Honest Agents: Their utility is maximized when they vote for proposals that promote protocol sustainability.

- Adversarial Agents: Their utility is maximized when they vote against such proposals to disrupt the protocol.

- Dominant Strategy for Adversarial Agents: Always vote “no” on proposals that benefit the protocol.

- Dominant Strategy for Honest Agents: Always vote “yes” to protect the protocol’s sustainability.

The introduction of adversarial agents highlights the importance of maintaining a majority of honest participants in governance protocols. Governance systems should be designed to ensure that:

- Honest agents hold the majority of voting power.

- Incentives are aligned to discourage malicious behavior.

- Mechanisms are in place to identify and mitigate governance attacks before they can cause significant harm.
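Why the first requirement refers to voting power rather than head count can be seen with a stake-weighted tally. In this sketch, honest agents play their dominant "yes" and the adversary plays "no". The tally rule (simple majority of stake, ties rejected, no quorum) and all stake values are hypothetical assumptions, not a specific protocol's rules.

```python
def tally(votes: dict[str, tuple[str, float]]) -> str:
    """Stake-weighted simple majority: passes only if 'yes' stake exceeds 'no' stake."""
    yes = sum(stake for choice, stake in votes.values() if choice == "yes")
    no = sum(stake for choice, stake in votes.values() if choice == "no")
    return "passed" if yes > no else "rejected"


# Hypothetical stakes as percentages of total voting power.
honest_stake_majority = {"h1": ("yes", 30), "h2": ("yes", 25), "adv": ("no", 45)}
adversary_stake_majority = {"h1": ("yes", 30), "h2": ("yes", 15), "adv": ("no", 55)}

print(tally(honest_stake_majority))     # passed
print(tally(adversary_stake_majority))  # rejected
```

In the second case, two of the three agents are honest, yet the proposal fails because the adversary alone holds most of the stake.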

By using the MAID framework, protocol designers can analyze potential attack vectors and create governance systems that are more resilient to adversarial behavior.

Conclusion

The application of Multi-Agent Influence Diagrams (MAIDs) offers a structured method to analyze and secure decentralized governance protocols. As decentralized finance (DeFi) systems continue to grow, their governance models must evolve to handle the increasing complexity and diversity of participant behaviors. MAIDs provide a game-theoretic framework to formalize and verify agent incentives, helping to keep the decision-making process aligned with the long-term goals of the protocol. Through MAIDs, protocol designers can identify vulnerabilities arising from incentive misalignment and adversarial agents, and develop systems that promote collective action so that the majority of honest agents drive governance in a positive direction.
